Artificial intelligence has long been a reality in Switzerland: almost half of the population already uses AI tools – among younger people, the figure is over 80%. But while private use is booming, companies are lagging behind: only a fraction of them have clear AI strategies. The biggest risk? Shadow AI. More than half of employees worldwide use unauthorised AI applications – often with sensitive data. This shadow usage is also growing rapidly in Switzerland, jeopardising compliance and security. Companies must act now to balance innovation and governance.

AI caught between innovation, risk and costs

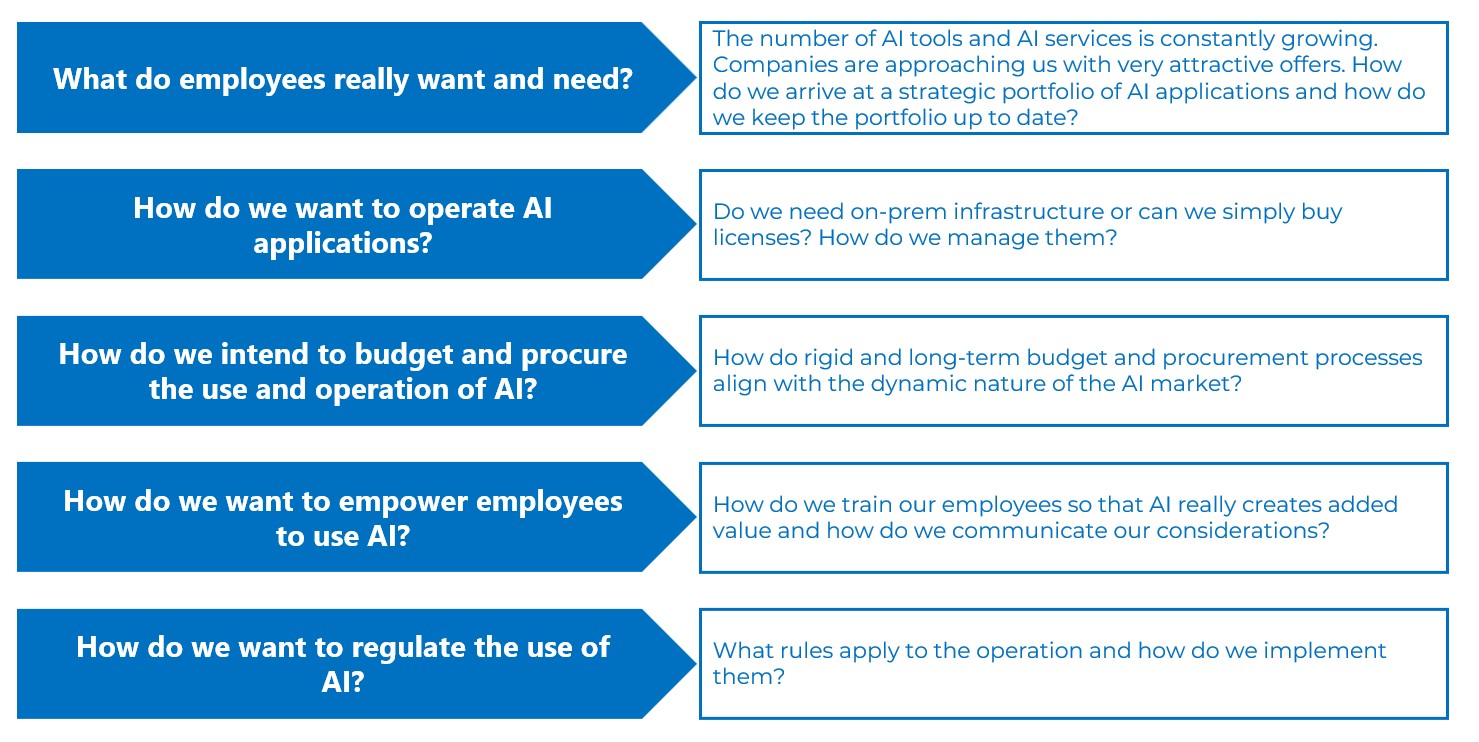

This challenge is exacerbated by the extraordinarily dynamic development of the AI landscape. New models, services and providers are emerging at a pace that overwhelms traditional IT governance processes. At the same time, this puts even more pressure on IT budgets, while the scope for investment remains limited. The following challenges arise in particular in the conflict between innovation, risk and costs:

- Employees come up with new ideas for the use of AI applications almost daily and want to use them as they already do in their private lives.

- Managers do not want to miss out on the strategic connection to this key technology. They receive offers from companies that appear very attractive at first glance and are often overwhelmed when it comes to making a critical assessment.

- IT organisations are often not yet ready for the secure operation and integration of AI applications.

For decision-makers, the main challenge now is to find a balance in the ‘magic triangle’ of innovation, risk and cost. They need solutions that both unleash the innovative power of their organisation and make the associated risks and costs manageable. The key lies in well-thought-out AI governance that combines flexibility and control.

Key risks associated with the use of generative AI

The use of generative AI often entails a number of risks that are not always apparent to everyone and are rarely actively managed:

- Shadow AI: Unregulated use by employees leads to loss of control and security risks

- Uncontrolled costs: A lack of rules for licences, token usage and cloud contracts can blow budgets

- Compliance and data protection risks: Unclear or missing guidelines fail to prevent the transfer of sensitive data to external providers

- Strategic dependencies: The uncoordinated use of many tools creates dependencies that are difficult to control

A platform for central AI services to support balanced AI governance

Successfully introducing generative AI requires an approach that enables the accumulation of experience, preserves flexibility and ensures independence – all with minimal investment.

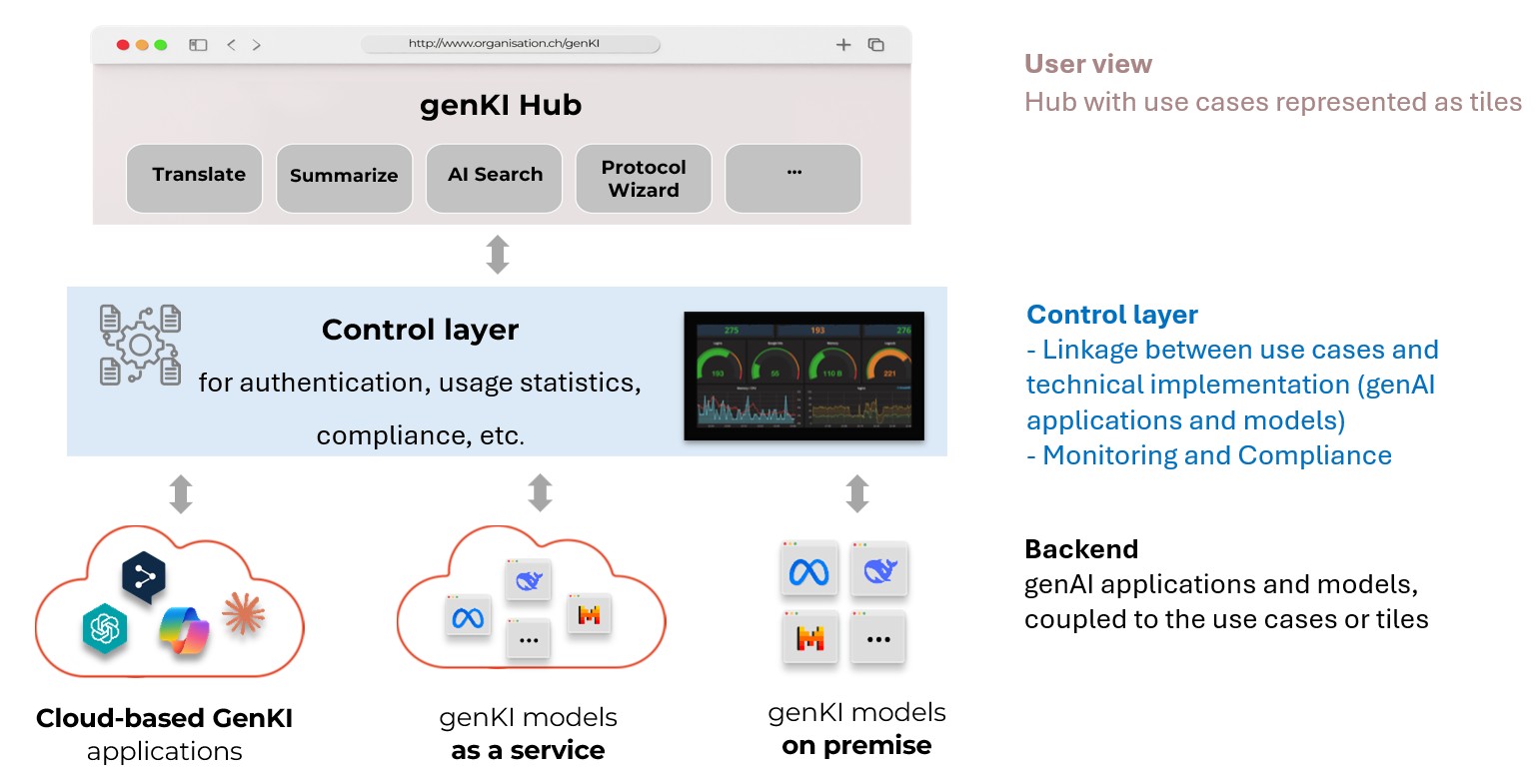

The pragmatic approach of a ‘platform for central AI services’ combines three layers:

- User interface: A central platform as a uniform access point and service catalogue for all AI use cases. It abstracts technical complexity and offers standardised services via the intranet.

- Control layer: Mediates between use cases and technical implementation. It enables control and monitoring and secures the foundations for safe, cost-efficient deployment.

- Technical implementation (backend): Flexible architecture for implementing AI services – from cloud solutions (‘AI as a Service’) to hosted models and on-premise options.
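
As a rough illustration of how a control layer might mediate between a use case and the available backends, the following Python sketch routes each request according to its data classification. All names here (`Backend`, `Request`, `ControlLayer`, the classification labels and the backend names) are hypothetical and do not refer to a specific product; a real control layer would also handle authentication, monitoring and model-specific configuration.

```python
from dataclasses import dataclass, field

# Hypothetical data classification levels, ordered from least to most sensitive.
LEVELS = {"public": 0, "internal": 1, "confidential": 2}


@dataclass(frozen=True)
class Backend:
    name: str
    max_level: str  # highest classification this backend is cleared for


@dataclass(frozen=True)
class Request:
    use_case: str
    user: str
    classification: str
    prompt: str


@dataclass
class ControlLayer:
    backends: list[Backend]
    audit_log: list[tuple[str, str, str]] = field(default_factory=list)

    def route(self, request: Request) -> Backend:
        """Return the first backend cleared for the request's classification."""
        needed = LEVELS[request.classification]
        for backend in self.backends:
            if LEVELS[backend.max_level] >= needed:
                # Record who used which use case on which backend.
                self.audit_log.append((request.user, request.use_case, backend.name))
                return backend
        raise PermissionError(
            f"No backend is cleared for '{request.classification}' data"
        )


# Backends are listed in order of preference (an assumption of this sketch).
control = ControlLayer(backends=[
    Backend("cloud-ai-service", max_level="internal"),
    Backend("on-premise-model", max_level="confidential"),
])

public_req = Request("summarise-press-release", "alice", "public", "Summarise this text.")
secret_req = Request("analyse-case-file", "bob", "confidential", "Analyse this file.")

print(control.route(public_req).name)
print(control.route(secret_req).name)
```

In this sketch the user interface would only ever call `route`, so backends can be added, replaced or reordered without changing the use cases that sit on top of them.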

Advantages of the architecture for the AI platform

- Strategic control: the AI platform allows different technical implementation options to be tested against each other and evaluated centrally. The modular concept also allows for the gradual integration of new functions and use cases.

- Procurement optimisation: the AI platform allows important information to be collected, such as token consumption and the actual user demand for individual use cases. The manageable investment volume required for the platform prevents misallocation and optimises the procurement of an operational platform at scale. Since the AI platform also enables the use of generative AI models from different providers and the integration of multiple licences, the negotiating position vis-à-vis providers is improved.

- Cost control: the AI platform's dashboard and the integration of IAM systems such as Active Directory not only allow access to the platform to be controlled, but also enable actual usage to be measured accurately. This allows for granular cost control as opposed to a ‘hit or miss’ approach to procuring licences or infrastructure.

- Risk management: As part of the implementation of use cases, individual risks can be identified and assessed, and the control layer of the AI platform allows certain compliance rules to be enforced.
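
To make the cost-control idea more concrete, here is a minimal Python sketch of how token usage recorded by such a platform could be aggregated per organisational group and compared against a budget. The record fields, group names, budgets and the flat price per 1,000 tokens are invented for illustration; in practice the group would likely come from the IAM system and the prices from the provider contracts.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageRecord:
    user: str
    group: str      # assumed to be resolved from the IAM directory
    use_case: str
    tokens: int


# Hypothetical flat price in cents per 1,000 tokens; real prices vary by model.
PRICE_CENTS_PER_1K_TOKENS = 2


def cost_per_group(records: list[UsageRecord]) -> dict[str, int]:
    """Sum token consumption per group and convert it to a cost in cents."""
    tokens: dict[str, int] = defaultdict(int)
    for record in records:
        tokens[record.group] += record.tokens
    return {g: t * PRICE_CENTS_PER_1K_TOKENS // 1000 for g, t in tokens.items()}


def groups_over_budget(costs: dict[str, int], budgets: dict[str, int]) -> list[str]:
    """List groups whose measured cost exceeds their budget (no budget means 0)."""
    return sorted(g for g, cost in costs.items() if cost > budgets.get(g, 0))


records = [
    UsageRecord("alice", "finance", "contract-summary", 120_000),
    UsageRecord("bob", "finance", "translation", 30_000),
    UsageRecord("carol", "legal", "contract-summary", 40_000),
]
costs = cost_per_group(records)
print(costs)
print(groups_over_budget(costs, budgets={"finance": 250, "legal": 100}))
```

Working in integer cents avoids floating-point rounding in the totals; the same aggregation could be keyed by use case instead of group to show where demand actually arises.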

Relevance and limitations of a ‘platform for central AI services’

Implementing an AI platform based on the architecture outlined above helps organisations gain initial experience with generative AI. It provides centralised, easy access for employees. It also simplifies administration, helps optimise costs and reduces the risk of shadow AI.

Such an AI platform also has its limitations:

- No substitute for AI strategy - the AI platform does not replace an overarching AI strategy. Governance structures and process transformation must still be defined separately.

- Choosing suitable use cases - the restriction to individual use cases must be appropriate for the organisation. The proposed architecture is particularly valuable if AI is to be used on confidential or secret data. However, if integrated solutions such as Copilot or AI agents can be used, i.e. if data protection requirements and legal guidelines allow it, such an AI platform makes little sense. A potential analysis should therefore be carried out in advance to determine for which use cases or user groups this approach is relevant.

- No substitute for change management - even though the AI platform makes it easier to get started with AI technologies, additional change management measures are still required. In addition to courses on the value-adding use of AI for all employees, AI courses for managers are also relevant: they help managers understand the strategic and organisational challenges and opportunities of AI use and give them a basis for making decisions on this topic.

Conclusion: AI governance as a strategic competitive advantage?

Effective AI governance does not hinder innovation – it enables it. Organisations that invest in well-thought-out governance today secure a strategic advantage: they can implement AI innovations faster, more securely and more cost-effectively than their competitors.

The key advantage of our approach lies in the combination of centralised control and operational flexibility: while governance processes minimise risks and make costs transparent, the flexible backend architecture enables rapid adaptation to new technologies and market developments.

In a world where AI is becoming a differentiating factor, professional governance is not a ‘nice-to-have’ but a strategic must.

Would you like to learn more? Contact our team of experts at ELCA Advisory to discuss the relevance of AI governance for your organisation. We look forward to hearing from you!

Meet the author: Nadine TSCHICHOLD-GÜRMAN

Practice Leader Public Sector & Professional Services

Nadine Tschichold leads our Public Sector & Professional Services practices. She is committed to driving innovation and digital transformation within the public sector in Switzerland, covering the federal government, cantons and municipalities. Prior to joining ELCA, Nadine led the build-up of the project management office at MeteoSwiss, where she guided and managed numerous projects, including cross-organizational projects in collaboration with various federal offices. Nadine started her professional career in a large consulting company after her master's in Computer Science and a PhD in Neural Networks and AI, both at ETH Zürich.

Meet the author: Nicolas Zahn

Senior Manager - ELCA Advisory

Nicolas Zahn is a Senior Manager in our Zürich office. Nicolas holds a Master of Arts in International Affairs from the Graduate Institute Geneva. During a fellowship program, he dealt intensively with digital transformation in the public sector and completed work stays at the OECD, Singapore, Estonia and a German think tank. At ELCA, his focus is on consulting for digital transformation including AI, ranging from strategy development and the strategic alignment of business and IT to managing the implementation of the resulting strategies and concepts.

Nadine Tschichold

Practice Leader Public Sector & Professional Services - ELCA Advisory

Nadine Tschichold leads our Public Sector & Professional Services practices. She is committed to driving innovation and digital transformation within the public sector in Switzerland, covering the federal government, cantons and municipalities. Nadine joined ELCA in 2014 and has since been leading countless consulting mandates with public sector focus.